This website/page will be End-of-life (EOL) after 31 August 2024. We recommend you to visit OpenEBS Documentation for the latest Mayastor documentation (v2.6 and above).

Mayastor is now also referred to as OpenEBS Replicated PV Mayastor.

By default, Mayastor allows the creation of three etcd members. If you wish to increase the number of etcd replicas, you will encounter an error. However, you can make the necessary configuration changes discussed in this guide to make it work.

StatefulSets are Kubernetes resources designed for managing stateful applications. They provide stable network identities and persistent storage for pods. StatefulSets ensure ordered deployment and scaling, support persistent volume claims, and manage the state of applications. They are commonly used for databases, messaging systems, and distributed file systems. Here's how StatefulSets function:

For a StatefulSet with N replicas, when pods are deployed, they are created sequentially in order from {0..N-1}.

When pods are deleted, they are terminated in reverse order from {N-1..0}.

Before a scaling operation is applied to a pod, all of its predecessors must be running and ready.

Before a pod is terminated, all of its successors must be completely shut down.
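The ordering guarantees above can be illustrated with a small Python sketch. It is only a model of the rules as stated here, not Kubernetes code; the function names are hypothetical, and the `mayastor-etcd` pod name prefix is taken from the examples in this guide.

```python
def creation_order(replicas: int, prefix: str = "mayastor-etcd") -> list[str]:
    """Pods are created sequentially, from ordinal 0 up to N-1."""
    return [f"{prefix}-{i}" for i in range(replicas)]


def termination_order(replicas: int, prefix: str = "mayastor-etcd") -> list[str]:
    """Pods are terminated in reverse order, from ordinal N-1 down to 0."""
    return list(reversed(creation_order(replicas, prefix)))


def predecessors_ready(ordinal: int, ready: set[int]) -> bool:
    """A scaling operation on pod `ordinal` requires all lower ordinals to be ready."""
    return all(i in ready for i in range(ordinal))


print(creation_order(4))
print(termination_order(4))
print(predecessors_ready(3, {0, 1, 2}))
```

With three ready etcd pods (ordinals 0 to 2), the check for ordinal 3 passes, which is why a fourth member can be scheduled once the existing three are healthy.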

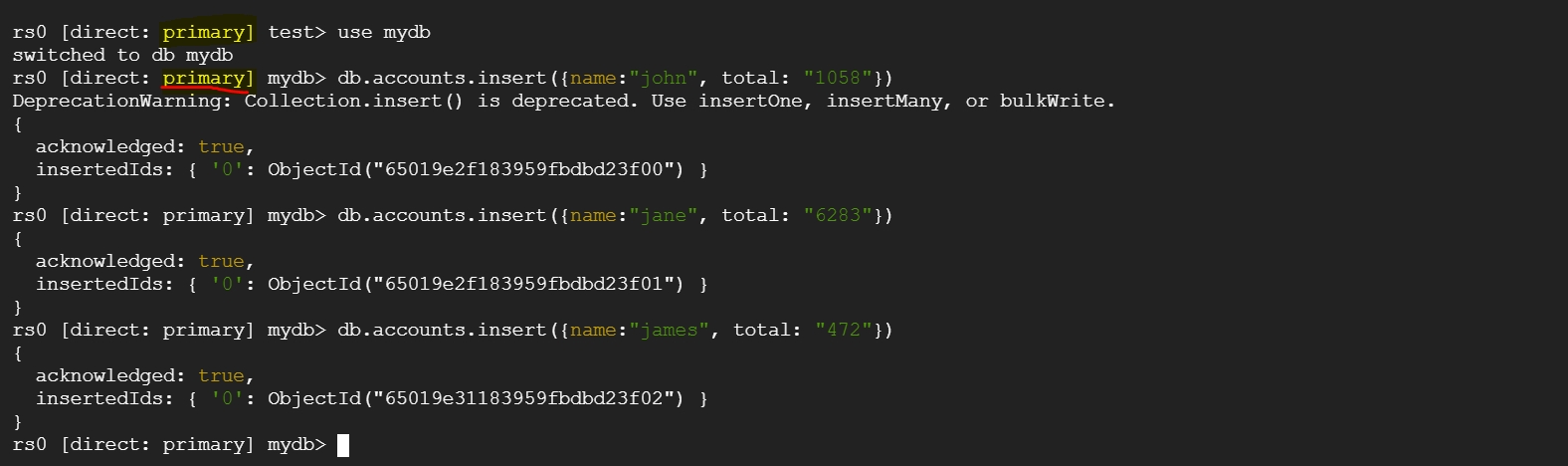

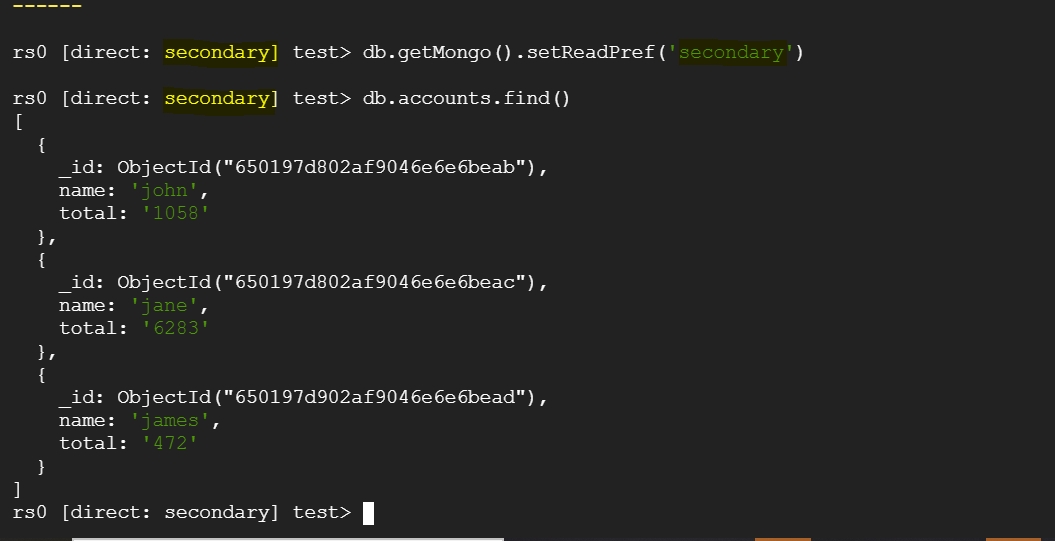

From etcd-0/1/2, we can see that all the values are registered in the database. Once we scale up etcd with "n" replicas, all the key-value pairs should be available across all the pods.

To scale up the etcd members, the following steps can be performed:

Add a new etcd member

Add a peer URL

Create a PV (Persistent Volume)

Validate key-value pairs

To increase the number of replicas to 4, use the following kubectl scale command:

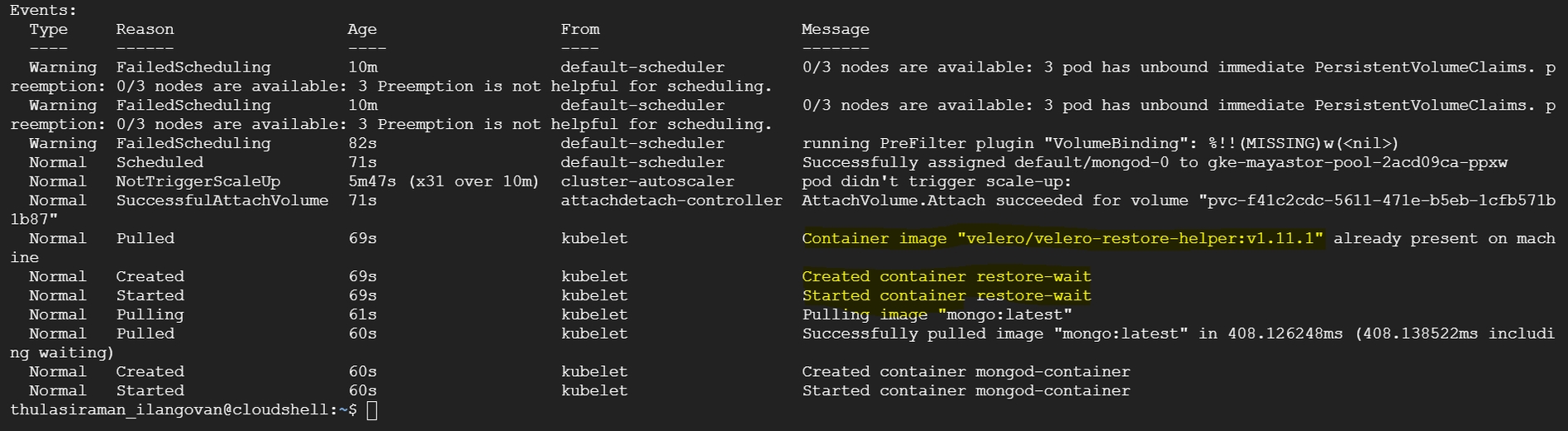

The new pod will be created on available nodes but will be in a pending state as there is no PV/PVC created to bind the volumes.

Before creating a PV, we need to add the new peer URL (mayastor-etcd-3=http://mayastor-etcd-3.mayastor-etcd-headless.mayastor.svc.cluster.local:2380) and change the cluster's initial state from "new" to "existing" so that the new member will be added to the existing cluster when the pod comes up after creating the PV. Since the new pod is still in a pending state, the changes will not be applied to the other pods as they will be restarted in reverse order from {N-1..0}. It is expected that all of its predecessors must be running and ready.
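The peer URL list follows a regular pattern, so it can be generated rather than typed by hand. The sketch below is illustrative only: the helper names are hypothetical, and it assumes the release name `mayastor`, the namespace `mayastor`, the headless service `mayastor-etcd-headless`, and peer port 2380, as in the example URL above.

```python
def peer_entry(ordinal: int, release: str = "mayastor", namespace: str = "mayastor") -> str:
    """Build one `name=peer-url` entry for the etcd member with this ordinal."""
    pod = f"{release}-etcd-{ordinal}"
    host = f"{pod}.{release}-etcd-headless.{namespace}.svc.cluster.local"
    return f"{pod}=http://{host}:2380"


def initial_cluster(replicas: int, release: str = "mayastor", namespace: str = "mayastor") -> str:
    """Join the entries for ordinals 0..replicas-1 into an ETCD_INITIAL_CLUSTER value."""
    return ",".join(peer_entry(i, release, namespace) for i in range(replicas))


# Value for a four-member cluster, suitable for pasting into the StatefulSet spec.
print(initial_cluster(4))
```

Generating the value avoids copy-and-paste mistakes in the long comma-separated string when more than one member is added.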

Create a PV with the following YAML. Change the pod name/claim name based on the pod's unique identity.

Run the following command from the new etcd pod and ensure that the values are the same as those in etcd-0/1/2. Otherwise, it indicates a data loss issue.

Mayastor uses an etcd database to persist configuration and state information. etcd is set up as a Kubernetes StatefulSet when Mayastor is installed.

Disk pool status:

```
kubectl get dsp -n mayastor
```

```
NAME     NODE       STATE    POOL_STATUS   CAPACITY       USED          AVAILABLE
pool-0   worker-0   Online   Online        374710730752   22561161216   352149569536
pool-1   worker-1   Online   Online        374710730752   21487419392   353223311360
pool-2   worker-2   Online   Online        374710730752   21793603584   352917127168
```

Scale the StatefulSet:

```
kubectl scale sts mayastor-etcd -n mayastor --replicas=4
```

```
statefulset.apps/mayastor-etcd scaled
```

Check the etcd pods:

```
kubectl get pods -n mayastor -l app=etcd
```

```
NAME              READY   STATUS    RESTARTS   AGE
mayastor-etcd-0   1/1     Running   0          28d
mayastor-etcd-1   1/1     Running   0          28d
mayastor-etcd-2   1/1     Running   0          28d
mayastor-etcd-3   0/1     Pending   0          2m34s
```

Edit the StatefulSet to add the peer URL and change the initial cluster state:

```
kubectl edit sts mayastor-etcd -n mayastor
```

```yaml
        - name: ETCD_INITIAL_CLUSTER_STATE
          value: existing
        - name: ETCD_INITIAL_CLUSTER
          value: mayastor-etcd-0=http://mayastor-etcd-0.mayastor-etcd-headless.mayastor.svc.cluster.local:2380,mayastor-etcd-1=http://mayastor-etcd-1.mayastor-etcd-headless.mayastor.svc.cluster.local:2380,mayastor-etcd-2=http://mayastor-etcd-2.mayastor-etcd-headless.mayastor.svc.cluster.local:2380,mayastor-etcd-3=http://mayastor-etcd-3.mayastor-etcd-headless.mayastor.svc.cluster.local:2380
```

PV definition (pv-etcd.yaml):

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  annotations:
    meta.helm.sh/release-name: mayastor
    meta.helm.sh/release-namespace: mayastor
    pv.kubernetes.io/bound-by-controller: "yes"
  finalizers:
    - kubernetes.io/pv-protection
  labels:
    app.kubernetes.io/managed-by: Helm
    statefulset.kubernetes.io/pod-name: mayastor-etcd-3
  name: etcd-volume-3
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 2Gi
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: data-mayastor-etcd-3
    namespace: mayastor
  hostPath:
    path: /var/local/mayastor/etcd/pod-3
    type: ""
  persistentVolumeReclaimPolicy: Delete
  storageClassName: manual
  volumeMode: Filesystem
```

```
kubectl apply -f pv-etcd.yaml -n mayastor
```

```
persistentvolume/etcd-volume-3 created
```

Validate the key-value pairs from the new pod:

```
kubectl exec -it mayastor-etcd-3 -n mayastor -- bash
# Inside the pod:
export ETCDCTL_API=3
etcdctl get --prefix ""
```


The supportability tool collects Mayastor specific information from the cluster using the command-line tool. It uses the dump command, which interacts with the Mayastor services to build an archive (ZIP) file that acts as a placeholder for the bundled information.

To bundle Mayastor's complete system information, execute:

To view all the available options and sub-commands that can be used with the dump command, execute:

The archive files generated by the dump command are stored in the specified output directories. The tables below specify the path and the content that will be stored in each archive file.

The supportability tool generates support bundles, which are used for debugging purposes. These bundles are created in response to the user's invocation of the tool and can be transmitted only by the user. Below is the information collected by the supportability tool that might be identified as 'sensitive' based on the organization's data protection/privacy commitments and security policies.

Logs: The default installation of Mayastor includes the deployment of a log aggregation subsystem based on Grafana Loki. All the pods deployed in the same namespace as Mayastor and labelled with openebs.io/logging=true will have their logs incorporated within this centralized collector. These logs may include the following information:

Kubernetes (K8s) node hostnames

IP addresses

container addresses

API endpoints

K8s Events: The archive files generated by the supportability tool contain information on all the events of the Kubernetes cluster present in the same namespace as Mayastor.

etcd Dump: The default installation of Mayastor deploys an etcd instance for its exclusive use. This key-value store is used to persist state information for Mayastor-managed objects. The stored key-value pairs are required for diagnostic and troubleshooting purposes. The etcd dump archive file consists of the following information:

Kubernetes node hostnames

IP addresses

PVC/PV names

Container names


A volume restore from an existing snapshot creates an exact replica of a storage volume as captured at a specific point in time. Volume restores serve as an essential tool for data protection, recovery, and efficient management in Kubernetes environments. This article provides a step-by-step guide on how to create a volume restore.

To begin, you'll need to create a StorageClass that defines the properties of the snapshot to be restored. Refer to for more details. Use the following command to create the StorageClass:

Note the name of the StorageClass, which, in this example, is mayastor-1-restore.

You need to create a volume snapshot before proceeding with the restore. Follow the steps outlined in to create a volume snapshot.

Note the snapshot's name, for example, pvc-snap-1.

After creating a snapshot, you can create a PersistentVolumeClaim (PVC) from it to generate the volume restore. Use the following command:

By running this command, you create a new PVC named restore-pvc based on the specified snapshot. The restored volume will have the same data and configuration as the original volume had at the time of the snapshot.


When a Mayastor volume is provisioned based on a StorageClass which has a replication factor greater than one (set by its repl parameter), the control plane will attempt, through a 'Kubernetes-like' reconciliation loop, to maintain that number of identical copies of the volume's data at any point in time. These copies are called "replicas" (a replica is a nexus target "child"). When a volume is first provisioned, the control plane will attempt to create the required number of replicas, whilst adhering to its internal heuristics for their location within the cluster (which will be discussed shortly). If it succeeds, the volume will become available and will bind with the PVC. If the control plane cannot identify a sufficient number of eligible Mayastor Pools in which to create the required replicas at the time of provisioning, the operation will fail; the Mayastor Volume will not be created and the associated PVC will not be bound. Kubernetes will periodically retry the volume creation and, if at any time the appropriate number of pools can be selected, the volume provisioning should succeed.

Once a volume is processing I/O, each of its replicas will also receive I/O. Reads are round-robin distributed across replicas, whilst writes must be written to all. In a real world environment this is attended by the possibility that I/O to one or more replicas might fail at any time. Possible reasons include transient loss of network connectivity, node reboots, node or disk failure. If a volume's nexus (NVMe controller) encounters 'too many' failed I/Os for a replica, then that replica's child status will be marked Faulted and it will no longer receive I/O requests from the nexus. It will remain a member of the volume, whose departure from the desired state with respect to replica count will be reflected with a volume status of Degraded. How many I/O failures are considered "too many" in this context is outside the scope of this discussion.

When a replica is faulted, the control plane will first 'retire' it, after which it will no longer be associated with the volume. Once retired, a replica will become available for garbage collection (deletion from the Mayastor Pool containing it), assuming that the nature of the failure was such that the pool itself is still viable (i.e. the underlying disk device is still accessible). The control plane will then attempt to restore the desired state (replica count) by creating a new replica, following its replica placement rules. If it succeeds, the nexus will "rebuild" that new replica, performing a full copy of all data from a healthy (Online) replica, i.e. the source. This process can proceed whilst the volume continues to process application I/Os, although it will contend for disk throughput at both the source and destination disks.

If a nexus is cleanly restarted, i.e. the Mayastor pod hosting it restarts gracefully, with the assistance of the control plane it will 'recompose' itself; all of the previous healthy member replicas will be re-attached to it. If previously faulted replicas are available to be re-connected (Online), then the control plane will attempt to reuse and rebuild them directly, rather than seek replacements for them first. This edge-case therefore does not result in the retirement of the affected replicas; they are simply reused. If the rebuild fails then we follow the above process of removing a Faulted replica and adding a new one. On an unclean restart (i.e. the Mayastor pod hosting the nexus crashes or is forcefully deleted) only one healthy replica will be re-attached and all other replicas will eventually be rebuilt.

Once provisioned, the replica count of a volume can be changed using the kubectl-mayastor plugin scale subcommand. The value of the num_replicas field may be either increased or decreased by one and the control plane will attempt to satisfy the request by creating or destroying a replica as appropriate, following the same replica placement rules as described herein. If the replica count is reduced, faulted replicas will be selected for removal in preference to healthy ones.

Accurate predictions of the behaviour of Mayastor with respect to replica placement and management of replica faults can be made by reference to these 'rules', which are a simplified representation of the actual logic:

"Rule 1": A volume can only be provisioned if the replica count (and capacity) of its StorageClass can be satisfied at the time of creation

"Rule 2": Every replica of a volume must be placed on a different Mayastor Node

"Rule 3": Children with the state Faulted are always selected for retirement in preference to those with state Online

N.B.: By application of the 2nd rule, replicas of the same volume cannot exist within different pools on the same Mayastor Node.
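The three rules can be modelled in a few lines of Python. This is a simplified sketch for reasoning about the scenarios that follow, not the control plane's actual logic: the `Pool` type and function names are hypothetical, and the tie-breaking choice (prefer the pool with the most free space) is an assumption made only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Pool:
    name: str
    node: str
    free_bytes: int
    online: bool = True


def candidate_pools(pools: list[Pool], used_nodes: set[str], size: int) -> list[Pool]:
    """Rule 2: skip nodes that already host a replica of this volume."""
    return [p for p in pools
            if p.online and p.node not in used_nodes and p.free_bytes >= size]


def place_replicas(pools: list[Pool], count: int, size: int) -> Optional[list[Pool]]:
    """Rule 1: provisioning succeeds only if every replica can be placed."""
    chosen: list[Pool] = []
    used_nodes: set[str] = set()
    for _ in range(count):
        candidates = candidate_pools(pools, used_nodes, size)
        if not candidates:
            return None  # not enough eligible pools: the volume is not created
        best = max(candidates, key=lambda p: p.free_bytes)  # assumed heuristic
        chosen.append(best)
        used_nodes.add(best.node)
    return chosen


def pick_for_retirement(children: dict[str, str]) -> str:
    """Rule 3: Faulted children are selected in preference to Online ones."""
    return sorted(children, key=lambda name: children[name] != "Faulted")[0]


pools = [Pool("Pool-1-A", "Node-1", 100), Pool("Pool-1-B", "Node-1", 100),
         Pool("Pool-2-A", "Node-2", 100), Pool("Pool-2-B", "Node-2", 100)]
print(place_replicas(pools, 2, 10))   # one pool on each node
print(place_replicas(pools, 3, 10))   # None: only two nodes are available
```

In this model a two-node cluster can never host a three-replica volume, however many pools each node has, which is the consequence noted in the N.B. above.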

A cluster has two Mayastor nodes deployed, "Node-1" and "Node-2". Each Mayastor node hosts two Mayastor pools and currently, no Mayastor volumes have been defined. Node-1 hosts pools "Pool-1-A" and "Pool-1-B", whilst Node-2 hosts "Pool-2-A" and "Pool-2-B". When a user creates a PVC from a StorageClass which defines a replica count of 2, the Mayastor control plane will seek to place one replica on each node (it 'follows' Rule 2). Since in this example it can find a suitable candidate pool with sufficient free capacity on each node, the volume is provisioned and becomes "healthy" (Rule 1). Pool-1-A is selected on Node-1, and Pool-2-A is selected on Node-2 (all pools being of equal capacity and replica count, in this initial 'clean' state).

Sometime later, the physical disk of Pool-2-A encounters a hardware failure and goes offline. The volume is in use at the time, so its nexus (NVMe controller) starts to receive I/O errors for the replica hosted in that Pool. The associated replica's child from Pool-2-A enters the Faulted state and the volume state becomes Degraded (as seen through the kubectl-mayastor plugin).

Expected Behaviour: The volume will maintain read/write access for the application via the remaining healthy replica. The faulty replica from Pool-2-A will be removed from the nexus, thus changing the nexus state to Online, as the remaining replica is healthy. A new replica is created on either Pool-2-A or Pool-2-B and added to the nexus. The new replica child is rebuilt and eventually the state of the volume returns to Online.

A cluster has three Mayastor nodes deployed, "Node-1", "Node-2" and "Node-3". Each Mayastor node hosts one pool: "Pool-1" on Node-1, "Pool-2" on Node-2 and "Pool-3" on Node-3. No Mayastor volumes have yet been defined; the cluster is 'clean'. A user creates a PVC from a StorageClass which defines a replica count of 2. The control plane determines that it is possible to accommodate one replica within the available capacity of each of Pool-1 and Pool-2, and so the volume is created. An application is deployed on the cluster which uses the PVC, so the volume receives I/O.

Unfortunately, due to user error, the SAN LUN which is used to persist Pool-2 becomes detached from Node-2, causing I/O failures in the replica which it hosts for the volume. As with scenario one, the volume state becomes Degraded and the state of the faulted replica's child becomes Faulted.

Expected Behaviour: Since there is a Mayastor pool on Node-3 which has sufficient capacity to host a replacement replica, a new replica can be created (Rule 2: this 'third' incoming replica isn't located on either of the nodes that the two original ones are). The faulted replica in Pool-2 is retired from the nexus and a new replica is created on Pool-3 and added to the nexus. The new replica is rebuilt and eventually the state of the volume returns to Online.

In the cluster from Scenario two, sometime after the Mayastor volume has returned to the Online state, a user scales up the volume, increasing the num_replicas value from 2 to 3. Before doing so, they corrected the SAN misconfiguration and ensured that the pool on Node-2 was Online.

Expected Behaviour: The control plane will attempt to reconcile the difference in current (replicas = 2) and desired (replicas = 3) states. Since Node-2 no longer hosts a replica for the volume (the previously faulted replica was successfully retired and is no longer a member of the volume's nexus), the control plane will select it to host the new replica required (Rule 2 permits this). The volume state will become initially Degraded to reflect the difference in actual vs required redundant data copies but a rebuild of the new replica will be performed and eventually the volume state will be Online again.

A cluster has three Mayastor nodes deployed: "Node-1", "Node-2" and "Node-3". Each Mayastor node hosts two Mayastor pools. Node-1 hosts pools "Pool-1-A" and "Pool-1-B", Node-2 hosts "Pool-2-A" and "Pool-2-B", and Node-3 hosts "Pool-3-A" and "Pool-3-B". A single volume exists in the cluster, which has a replica count of 3. The volume's replicas are all healthy and are located on Pool-1-A, Pool-2-A and Pool-3-A. An application is using the volume, so all replicas are receiving I/O.

The host Node-3 goes down causing failure of all I/O sent to the replica it hosts from Pool-3-A.

Expected Behaviour: The volume will enter and remain in the Degraded state. The associated child from the replica from Pool-3-A will be in the state Faulted, as observed in the volume through the kubectl-mayastor plugin. Said replica will be removed from the Nexus thus changing the nexus state to Online as the other replicas are healthy. The replica will then be disowned from the volume (it won't be possible to delete it since the host is down). Since Rule 2 dictates that every replica of a volume must be placed on a different Mayastor Node no new replica can be created at this point and the volume remains Degraded indefinitely.

Given the post-host failure situation of Scenario four, the user scales down the volume, reducing the value of num_replicas from 3 to 2.

Expected Behaviour: The control plane will reconcile the actual (replicas=3) vs desired (replicas=2) state of the volume. The volume state will become Online again.

In Scenario five, after scaling down the Mayastor volume, the user waits for the volume state to become Online again. The desired and actual replica counts are now 2. The volume's replicas are located in pools on both Node-1 and Node-2. Node-3 is now back up and its pools Pool-3-A and Pool-3-B are Online. The user then scales the volume again, increasing num_replicas from 2 to 3.

Expected Behaviour: The volume's state will become Degraded, reflecting the difference in desired vs actual replica count. The control plane will select a pool on Node-3 as the location for the new replica required. Node-3 is therefore again a suitable candidate and has online pools with sufficient capacity.

Topology information:

| Archive file location | Resource type | Resource | File name | Description |
| --- | --- | --- | --- | --- |
| ./topology/node | node | node-01 | node-01-topology.json | Topology of node-01 (all node topologies will be available here) |
| ./topology/pool | pool | pool-01 | pool-01-topology.json | Topology of pool-01 (all pool topologies will be available here) |
| ./topology/volume | volume | volume-01 | volume-01-topology.json | Topology information of volume-01 (all volume topologies will be available here) |

Logs:

| Archive file location | Node | Container | File name |
| --- | --- | --- | --- |
| ./logs/core-agents | - | agent-core | loki-agent-core.log |
| ./logs/rest | - | api-rest | loki-api-rest.log |
| ./logs/csi-controller | - | csi-attacher | loki-csi-attacher.log |
| ./logs/csi-controller | - | csi-controller | loki-csi-controller.log |
| ./logs/csi-controller | - | csi-provisioner | loki-csi-provisioner.log |
| ./logs/diskpool-operator | - | operator-diskpool | loki-operator-disk-pool.log |
| ./logs/mayastor | node-02 | csi-driver-registrar | node-02-loki-csi-driver-registrar.log |
| ./logs/mayastor | node-01 | csi-node | node-01-loki-csi-node.log |
| ./logs/mayastor | node-01 | io-engine | node-01-loki-mayastor.log |
| ./logs/blot | node-02 | io-engine | node-02-loki-mayastor.log |
| ./logs/etcd | node-03 | etcd | node-03-loki-etcd.log |

Kubernetes resources:

| Archive file location | Resource | File name |
| --- | --- | --- |
| ./k8s_resources/configurations/ | agent-core (Deployment) | mayastor-agent-core.yaml |
| ./k8s_resources/configurations/ | api-rest | mayastor-api-rest.yaml |
| ./k8s_resources/configurations/ | csi-controller (Deployment) | mayastor-csi-controller.yaml |
| ./k8s_resources/configurations/ | csi-node (DaemonSet) | mayastor-csi-node.yaml |
| ./k8s_resources/configurations/ | etcd (StatefulSet) | mayastor-etcd.yaml |
| ./k8s_resources/configurations/ | loki (StatefulSet) | mayastor-loki.yaml |
| ./k8s_resources/configurations/ | operator-diskpool | mayastor-operator-disk-pool.yaml |
| ./k8s_resources/configurations/ | promtail (DaemonSet) | mayastor-promtail.yaml |
| ./k8s_resources/configurations/ | io-engine (DaemonSet) | io-engine.yaml |
| ./k8s_resources/configurations/ | disk_pools | k8s_diskPools.yaml |
| ./k8s_resources/configurations/ | all pods (deployed under the same namespace as Mayastor) | pods.yaml |
| ./k8s_resources | events | k8s_events.yaml |
| ./k8s_resources | volume snapshot classes | volume_snapshot_classes.yaml |
| ./k8s_resources | volume snapshot contents | volume_snapshot_contents.yaml |

Other files:

| Archive file location | Resource | File name |
| --- | --- | --- |
| ./ | etcd | etcd_dump |
| ./ | Support-tool | support_tool_logs.log |

Mayastor-specific information in the logs:

Container names

K8s Persistent Volume names (provisioned by Mayastor)

DiskPool names

Block device details (except the content)

K8s Definition Files: The support bundle includes definition files for all the Mayastor components. Some of these are listed below:

Deployments

DaemonSets

StatefulSets

VolumeSnapshotClass

VolumeSnapshotContent

Mayastor-specific information in the etcd dump:

User applications within the mayastor namespace

Block device details (except data content)

Options and sub-commands of the dump command:

```
kubectl mayastor dump --help
```

```
`Dump` resources

Usage: kubectl-mayastor dump [OPTIONS] <COMMAND>

Commands:
  system  Collects entire system information
  etcd    Collects information from etcd
  help    Print this message or the help for the given subcommand(s)

Options:
  -r, --rest <REST>
          The rest endpoint to connect to
  -t, --timeout <TIMEOUT>
          Specifies the timeout value to interact with other modules of system [default: 10s]
  -k, --kube-config-path <KUBE_CONFIG_PATH>
          Path to kubeconfig file
  -s, --since <SINCE>
          Period states to collect all logs from last specified duration [default: 24h]
  -l, --loki-endpoint <LOKI_ENDPOINT>
          LOKI endpoint, if left empty then it will try to parse endpoint from Loki service(K8s service resource), if the tool is unable to parse from service then logs will be collected using Kube-apiserver
  -e, --etcd-endpoint <ETCD_ENDPOINT>
          Endpoint of ETCD service, if left empty then will be parsed from the internal service name
  -d, --output-directory-path <OUTPUT_DIRECTORY_PATH>
          Output directory path to store archive file [default: ./]
  -n, --namespace <NAMESPACE>
          Kubernetes namespace of mayastor service [default: mayastor]
  -o, --output <OUTPUT>
          The Output, viz yaml, json [default: none]
  -j, --jaeger <JAEGER>
          Trace rest requests to the Jaeger endpoint agent
  -h, --help
          Print help

Supportability - collects state & log information of services and dumps it to a tar file.
```

StorageClass for the volume restore:

```
cat <<EOF | kubectl create -f -
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: mayastor-1-restore
parameters:
  ioTimeout: "30"
  protocol: nvmf
  repl: "1"
  thin: "true"
provisioner: io.openebs.csi-mayastor
EOF
```

Supportability dump of the complete system information:

```
kubectl mayastor dump system -n mayastor -d <output_directory_path>
```

PVC created from the snapshot:

```
cat <<EOF | kubectl create -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: restore-pvc # add a name for your new volume
spec:
  storageClassName: mayastor-1-restore # add your storage class name
  dataSource:
    name: pvc-snap-1 # add your volumeSnapshot name
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
EOF
```


Volume snapshots are copies of a persistent volume at a specific point in time. They can be used to restore a volume to a previous state or create a new volume. Mayastor provides support for industry standard copy-on-write (COW) snapshots, which is a popular methodology for taking snapshots by keeping track of only those blocks that have changed. Mayastor incremental snapshot capability enhances data migration and portability in Kubernetes clusters across different cloud providers or data centers. Using standard kubectl commands, you can seamlessly perform operations on snapshots and clones in a fully Kubernetes-native manner.

Use cases for volume snapshots include:

Efficient replication for backups.

Utilization of clones for troubleshooting.

Development against a read-only copy of data.

Volume snapshots allow the creation of read-only incremental copies of volumes, enabling you to maintain a history of your data. These volume snapshots possess the following characteristics:

Consistency: The data stored within a snapshot remains consistent across all replicas of the volume, whether local or remote.

Immutability: Once a snapshot is successfully created, the data contained within it cannot be modified.

Currently, Mayastor supports the following operations related to volume snapshots:

Creating a snapshot for a PVC

Listing available snapshots for a PVC

Deleting a snapshot for a PVC

Deploy and configure Mayastor by following the steps given and create disk pools.

Create a Mayastor StorageClass with single replica.

Create a PVC using steps and check if the status of the PVC is Bound.

Copy the PVC name, for example, ms-volume-claim.

(Optional) Create an application by following steps.

You can create a snapshot (with or without an application) using the PVC. Follow the steps below to create a volume snapshot:

Apply VolumeSnapshotClass details

Apply the snapshot
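As an illustration of the second step, the following Python sketch builds a VolumeSnapshot manifest from the three values described in this guide (snapshot name, snapshot class name, and PVC name). The helper function is hypothetical, and the example names `pvc-snap-1`, `csi-mayastor-snapshotclass`, and `ms-volume-claim` are the sample names used in these pages; substitute your own.

```python
import json


def volume_snapshot(name: str, snapshot_class: str, pvc_name: str) -> dict:
    """Build a VolumeSnapshot manifest that snapshots the given PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


manifest = volume_snapshot("pvc-snap-1", "csi-mayastor-snapshotclass", "ms-volume-claim")
# kubectl accepts JSON as well as YAML, so this output can be piped to `kubectl apply -f -`.
print(json.dumps(manifest, indent=2))
```

Building the manifest programmatically is only a convenience when many PVCs need snapshots; a hand-written YAML file with the same fields works equally well.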

To retrieve the details of the created snapshots, use the following command:

To delete a snapshot, use the following command:


Mayastor will fully utilize each CPU core that it was configured to run on. It will spawn a thread on each and the thread will run in an endless loop serving tasks dispatched to it without sleeping or blocking. There are also other Mayastor threads that are not bound to the CPU and those are allowed to block and sleep. However, the bound threads (also called reactors) rely on being interrupted by the kernel and other userspace processes as little as possible. Otherwise, the latency of IO may suffer.

Ideally, the only thing interrupting Mayastor's reactors would be the kernel's time-based interrupts responsible for CPU accounting. However, that is far from trivial. The isolcpus option that we will be using does not prevent the following from running on the isolated CPUs:

kernel threads

other k8s pods

However, it prevents system services, including kubelet, from interfering with Mayastor.

Note that the best way to accomplish this step may differ, based on the Linux distro that you are using.

Add the isolcpus kernel boot parameter to GRUB_CMDLINE_LINUX_DEFAULT in the grub configuration file, with a value which identifies the CPUs to be isolated (indexing starts from zero here). The location of the configuration file to change is typically /etc/default/grub but may vary. For example when running Ubuntu 20.04 in AWS EC2 Cloud boot parameters are in /etc/default/grub.d/50-cloudimg-settings.cfg.

In the following example we assume a system with 4 CPU cores in total, and that the third and the fourth CPU cores are to be dedicated to Mayastor.

Basic verification is by outputting the boot parameters of the currently running kernel:

You can also print a list of isolated CPUs:

To allot specific CPU cores for Mayastor's reactors, follow these steps:

Ensure that you have the Mayastor kubectl plugin installed, matching the version of your Mayastor Helm chart deployment.

Execute the following command to update Mayastor's configuration. Replace <namespace> with the appropriate Kubernetes namespace where Mayastor is deployed.

In the above command, io_engine.coreList={2,3} specifies that Mayastor's reactors should operate on the third and fourth CPU cores.
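Before setting the core list, it can be useful to confirm that the chosen cores are the ones actually isolated by the kernel. The Python sketch below is a hedged illustration with hypothetical helper names: it parses an isolcpus value out of a kernel command line string (as found in /proc/cmdline on Linux) and checks a proposed core list against it. It assumes the simple comma and range syntax (for example `2,3` or `2-3`) and ignores any non-numeric flag words in the value.

```python
def parse_cpu_list(text: str) -> list[int]:
    """Parse a CPU list such as '2,3' or '0-1,3' into sorted zero-based indices."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-", 1)
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def isolated_from_cmdline(cmdline: str) -> list[int]:
    """Return the CPUs named by an isolcpus= boot parameter, or [] if absent."""
    for token in cmdline.split():
        if token.startswith("isolcpus="):
            value = token.split("=", 1)[1]
            # Keep only numeric entries; flag words (if any) are skipped.
            numeric = ",".join(p for p in value.split(",") if p[:1].isdigit())
            return parse_cpu_list(numeric)
    return []


def core_list_is_isolated(core_list: list[int], cmdline: str) -> bool:
    """True if every core intended for the reactors is isolated."""
    return set(core_list) <= set(isolated_from_cmdline(cmdline))


example = "BOOT_IMAGE=/boot/vmlinuz root=/dev/xvda1 ro isolcpus=2,3"
print(isolated_from_cmdline(example))
print(core_list_is_isolated([2, 3], example))
```

On a live node, the string could be read from /proc/cmdline; the example string here is invented for illustration and matches the four-core case described above, where indices 2 and 3 are the third and fourth cores.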


Mayastor 2.0 enhances High Availability (HA) of the volume target with the nexus switch-over feature. In the event of a target failure, the switch-over feature quickly detects the failure and spawns a new nexus to ensure I/O continuity. The HA feature consists of two components: the HA node agent (which runs in each csi-node) and the cluster agent (which runs alongside the agent-core). The HA node agent looks for I/O path failures from applications to their corresponding targets. If any such broken path is encountered, the HA node agent informs the cluster agent. The cluster agent then creates a new target on a different (live) node. Once the target is created, the node agent establishes a new path between the application and its corresponding target. The HA feature restores the broken path within seconds, ensuring negligible downtime.

The volume's replica count must be higher than 1 for a new target to be established as part of switch-over.

The HA feature is enabled by default; to disable it, pass the parameter --set=agents.ha.enabled=false with the helm install command.


This quickstart guide describes the actions necessary to perform a basic installation of Mayastor on an existing Kubernetes cluster, sufficient for evaluation purposes. It assumes that the target cluster will pull the Mayastor container images directly from OpenEBS public container repositories. Where preferred, it is also possible to build Mayastor locally from source and deploy the resultant images but this is outside of the scope of this guide.

Deploying and operating Mayastor in production contexts requires a foundational knowledge of Mayastor internals and best practices, found elsewhere within this documentation.

| Parameter | Type | Description |
| --- | --- | --- |
| Name | String | Custom name of the snapshot class |
| Driver | String | CSI provisioner of the storage provider being requested to create a snapshot (io.openebs.csi-mayastor) |

| Parameter | Type | Description |
| --- | --- | --- |
| Name | String | Name of the snapshot |
| VolumeSnapshotClassName | String | Name of the created snapshot class |
| PersistentVolumeClaimName | String | Name of the PVC. Example: ms-volume-claim |

    sudo update-grub

    Sourcing file `/etc/default/grub'
    Sourcing file `/etc/default/grub.d/40-force-partuuid.cfg'
    Sourcing file `/etc/default/grub.d/50-cloudimg-settings.cfg'
    Sourcing file `/etc/default/grub.d/init-select.cfg'
    Generating grub configuration file ...
    Found linux image: /boot/vmlinuz-5.8.0-29-generic
    Found initrd image: /boot/microcode.cpio /boot/initrd.img-5.8.0-29-generic
    Found linux image: /boot/vmlinuz-5.4.0-1037-aws
    Found initrd image: /boot/microcode.cpio /boot/initrd.img-5.4.0-1037-aws
    Found Ubuntu 20.04.2 LTS (20.04) on /dev/xvda1
    done

    cat <<EOF | kubectl create -f -
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: mayastor-1
    parameters:
      ioTimeout: "30"
      protocol: nvmf
      repl: "1"
    provisioner: io.openebs.csi-mayastor
    EOF

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: mayastor-1
    parameters:
      ioTimeout: "30"
      protocol: nvmf
      repl: "1"
    provisioner: io.openebs.csi-mayastor

    kubectl get pvc

    NAME              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    ms-volume-claim   Bound    pvc-fe1a5a16-ef70-4775-9eac-2f9c67b3cd5b   1Gi        RWO            mayastor-1     15s

    cat <<EOF | kubectl create -f -
    kind: VolumeSnapshotClass
    apiVersion: snapshot.storage.k8s.io/v1
    metadata:
      name: csi-mayastor-snapshotclass
      annotations:
        snapshot.storage.kubernetes.io/is-default-class: "true"
    driver: io.openebs.csi-mayastor
    deletionPolicy: Delete
    EOF

    kind: VolumeSnapshotClass
    apiVersion: snapshot.storage.k8s.io/v1
    metadata:
      name: csi-mayastor-snapshotclass
      annotations:
        snapshot.storage.kubernetes.io/is-default-class: "true"
    driver: io.openebs.csi-mayastor
    deletionPolicy: Delete

    kubectl apply -f class.yaml

    volumesnapshotclass.snapshot.storage.k8s.io/csi-mayastor-snapshotclass created

    cat <<EOF | kubectl create -f -
    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshot
    metadata:
      name: mayastor-pvc-snap-1
    spec:
      volumeSnapshotClassName: csi-mayastor-snapshotclass
      source:
        persistentVolumeClaimName: ms-volume-claim
    EOF

    apiVersion: snapshot.storage.k8s.io/v1
    kind: VolumeSnapshot
    metadata:
      name: mayastor-pvc-snap-1
    spec:
      volumeSnapshotClassName: csi-mayastor-snapshotclass
      source:
        persistentVolumeClaimName: ms-volume-claim

    kubectl apply -f snapshot.yaml

    volumesnapshot.snapshot.storage.k8s.io/mayastor-pvc-snap-1 created

    kubectl get volumesnapshot

    NAME                  READYTOUSE   SOURCEPVC         SOURCESNAPSHOTCONTENT   RESTORESIZE   SNAPSHOTCLASS                SNAPSHOTCONTENT                                    CREATIONTIME   AGE
    mayastor-pvc-snap-1   true         ms-volume-claim                           1Gi           csi-mayastor-snapshotclass   snapcontent-174d9cd9-dfb2-4e53-9b56-0f3f783518df   57s            57s

    kubectl get volumesnapshotcontent

    NAME                                               READYTOUSE   RESTORESIZE   DELETIONPOLICY   DRIVER                    VOLUMESNAPSHOTCLASS          VOLUMESNAPSHOT        VOLUMESNAPSHOTNAMESPACE   AGE
    snapcontent-174d9cd9-dfb2-4e53-9b56-0f3f783518df   true         1073741824    Delete           io.openebs.csi-mayastor   csi-mayastor-snapshotclass   mayastor-pvc-snap-1   default                   87s

    kubectl delete volumesnapshot mayastor-pvc-snap-1

    volumesnapshot.snapshot.storage.k8s.io "mayastor-pvc-snap-1" deleted

    GRUB_CMDLINE_LINUX_DEFAULT="quiet splash isolcpus=2,3"

    sudo reboot

    cat /proc/cmdline

    BOOT_IMAGE=/boot/vmlinuz-5.8.0-29-generic root=PARTUUID=7213a253-01 ro console=tty1 console=ttyS0 nvme_core.io_timeout=4294967295 isolcpus=2,3 panic=-1

    cat /sys/devices/system/cpu/isolated

    2-3

    kubectl mayastor upgrade -n <namespace> --set-args 'io_engine.coreList={2,3}'
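The kernel reports isolated cores as a range list (`2-3` in the output above), while the `io_engine.coreList` Helm value in the upgrade command is written as an explicit list (`{2,3}`). The following is a minimal sketch of that conversion. The function name `cpu_list_to_core_list` is hypothetical and not part of any Mayastor tooling.

```shell
# Hypothetical helper: expand a kernel CPU list such as "2-3" or "0,2-3,7"
# into the brace form used by io_engine.coreList, e.g. "{2,3}".
cpu_list_to_core_list() {
  out=""
  old_ifs="$IFS"
  IFS=','
  for part in $1; do
    case "$part" in
      *-*)
        # A range "a-b": emit every core from a to b inclusive.
        start="${part%-*}"
        end="${part#*-}"
        i="$start"
        while [ "$i" -le "$end" ]; do
          out="${out:+$out,}$i"
          i=$((i + 1))
        done
        ;;
      *)
        # A single core number.
        out="${out:+$out,}$part"
        ;;
    esac
  done
  IFS="$old_ifs"
  printf '{%s}\n' "$out"
}

cpu_list_to_core_list "2-3"
```

On a node with isolated cores, the argument could be read directly from `/sys/devices/system/cpu/isolated`.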

For basic test and evaluation purposes it may not always be practical or possible to allocate physical disk devices on a cluster to Mayastor for use within its pools. As a convenience, Mayastor supports two disk device type emulations for this purpose:

Memory-Backed Disks ("RAM drive")

File-Backed Disks

Memory-backed Disks are the most readily provisioned if node resources permit, since Mayastor will automatically create and configure them as it creates the corresponding pool. However they are the least durable option - since the data is held entirely within memory allocated to a Mayastor pod, should that pod be terminated and rescheduled by Kubernetes, that data will be lost. Therefore it is strongly recommended that this type of disk emulation be used only for short duration, simple testing. It must not be considered for production use.

File-backed disks, as their name suggests, store pool data within a file held on a file system which is accessible to the Mayastor pod hosting that pool. Their durability depends on how they are configured; specifically, on which type of volume mount they are located. If located on a path which uses Kubernetes ephemeral storage (e.g. EmptyDir), they may be no more persistent than a RAM drive would be. However, if placed on their own Persistent Volume (e.g. a Kubernetes Host Path volume) then they may be considered 'stable'. They are slightly less convenient to use than memory-backed disks, in that the backing files must be created by the user as a separate step preceding pool creation. However, file-backed disks can be significantly larger than RAM disks as they consume considerably less memory resource within the hosting Mayastor pod.

Creating a memory-backed disk emulation entails using the "malloc" uri scheme within the Mayastor pool resource definition.

The example shown defines a pool named "mempool-1". The Mayastor pod hosted on "worker-node-1" automatically creates a 64MiB emulated disk for it to use, with the device identifier "malloc0" - provided that at least 64MiB of 2MiB-sized Huge Pages are available to that pod after the Mayastor container's own requirements have been satisfied.
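A pool definition matching this description might look like the sketch below, which writes the manifest to a local file for review before it is applied. The `apiVersion`, the `mayastor` namespace and the `size_mb` parameter name are assumptions that are not stated in this section; check them against the DiskPool CRD and parameter reference for your installed version.

```shell
# Write a DiskPool manifest for a 64MiB memory-backed disk to a local file.
# Assumptions: apiVersion, namespace and the size_mb parameter name.
cat > mempool-1.yaml <<'EOF'
apiVersion: "openebs.io/v1beta1"   # assumption: depends on the installed CRD version
kind: DiskPool
metadata:
  name: mempool-1
  namespace: mayastor              # assumption: namespace where Mayastor is deployed
spec:
  node: worker-node-1
  disks: ["malloc:///malloc0?size_mb=64"]
EOF

# Once reviewed, the file could be applied with: kubectl create -f mempool-1.yaml
```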

The pool definition accepts URIs matching the malloc:/// schema within its disks field for the purposes of provisioning memory-based disks. The general format is:

malloc:///malloc<DeviceId>?<parameters>

Where <DeviceId> is an integer value which uniquely identifies the device on that node, and where the parameter collection <parameters> may include the following:

Note: Memory-based disk devices are not over-provisioned, and the memory allocated to them is taken from the 2MiB-sized Huge Page resources available to the Mayastor pod. That is to say, creating a 64MiB device requires that at least 33 (32+1) 2MiB-sized pages are free for that Mayastor container instance to use. Satisfying the memory requirements of this disk type may require additional configuration on the worker node and changes to the resource request and limit spec of the Mayastor daemonset, in order to ensure that sufficient resource is available.
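The page arithmetic in the note can be sketched as a small helper. It assumes that the "32+1" rule for a 64MiB device generalises to "enough 2MiB pages to hold the device, plus one" for other sizes; only the 64MiB case is stated in this guide. The function name is hypothetical.

```shell
# Hypothetical helper: number of free 2MiB huge pages needed for a
# memory-backed disk of the given size in MiB (size rounded up to whole
# pages, plus one extra page as in the 64MiB example).
malloc_hugepages_needed() {
  size_mib="$1"
  page_mib=2
  echo $(( (size_mib + page_mib - 1) / page_mib + 1 ))
}

malloc_hugepages_needed 64
# Free huge pages on a node can be compared against this value with:
#   grep HugePages_Free /proc/meminfo
```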

Mayastor can use file-based disk emulation in place of physical pool disk devices, by employing the aio:/// URI schema within the pool's declaration in order to identify the location of the file resource.

The examples shown seek to create a pool using a file named "disk1.img", located in the /var/tmp directory of the Mayastor container's file system, as its member disk device. For this operation to succeed, the file must already exist on the specified path (which should be the full path to the file) and this path must be accessible by the Mayastor pod instance running on the corresponding node.

The aio:/// schema requires no other parameters but optionally, "blk_size" may be specified. Block size accepts a value of either 512 or 4096, corresponding to the emulation of either a 512-byte or 4kB sector size device. If this parameter is omitted the device defaults to using a 512-byte sector size.
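As an illustration of the URI rules above, the following sketch builds an aio:/// URI from a full file path and an optional block size, rejecting values other than 512 and 4096. The helper name `aio_uri` is hypothetical; the `?blk_size=` query form follows the parameter name given above.

```shell
# Hypothetical helper: build an aio:/// disk URI for a DiskPool definition.
# Usage: aio_uri <absolute-path> [blk_size]
aio_uri() {
  path="$1"
  blk_size="${2:-}"
  case "$path" in
    /*) ;;  # the path must be the full (absolute) path to the backing file
    *) echo "path must be absolute: $path" >&2; return 1 ;;
  esac
  case "$blk_size" in
    "")       printf 'aio://%s\n' "$path" ;;            # defaults to 512-byte sectors
    512|4096) printf 'aio://%s?blk_size=%s\n' "$path" "$blk_size" ;;
    *) echo "blk_size must be 512 or 4096" >&2; return 1 ;;
  esac
}

aio_uri /var/tmp/disk1.img
aio_uri /var/tmp/disk1.img 4096
```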

File-based disk devices are not over-provisioned; to create a 10GiB pool disk device requires that a 10GiB-sized backing file exist on a file system on an accessible path.

The preferred method of creating a backing file is to use the Linux truncate command. The following example demonstrates the creation of a 1GiB-sized file named disk1.img within the directory /tmp.
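A minimal sketch of that step, assuming GNU coreutils (where the `1G` suffix means 1024^3 bytes and `stat -c` is available):

```shell
# Create a 1GiB backing file. truncate produces a sparse file, so blocks
# are only allocated on disk as data is written to it.
truncate -s 1G /tmp/disk1.img

# Confirm the apparent size in bytes.
stat -c %s /tmp/disk1.img
```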

v1.25.10

v1.23.7

v1.22.10

v1.21.13

By following the given steps, you can successfully migrate etcd from one node to another during maintenance activities like node drain etc., ensuring the continuity and integrity of the etcd data.

Assuming we have a three-node cluster with three etcd replicas, verify the etcd pods with the following commands:

Command to verify pods:

From etcd-0/1/2, we can see that all the values are registered in the database. Once etcd has been migrated to the new node, all the key-value pairs should be available across all the pods. Run the following commands from any etcd pod.

Commands to get etcd data:

In this example, we drain the etcd node worker-0 and migrate its etcd pod to the next available node (in this case, worker-4). Use the following command:

Command to drain the node:

After draining the worker-0 node, the etcd pod will be scheduled on the next available node, which is the worker-4 node.

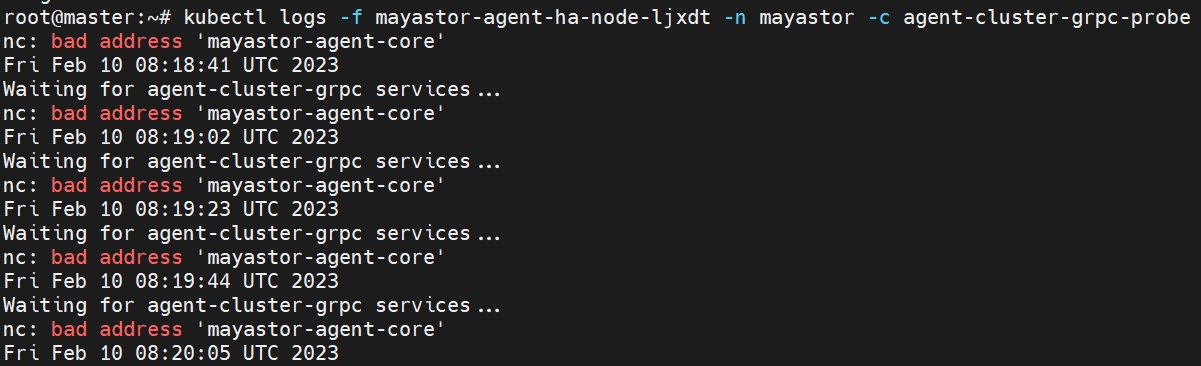

The pod may end up in a CrashLoopBackOff status with specific errors in the logs.

When the pod is scheduled on the new node, it attempts to bootstrap the member again, but since the member is already registered in the cluster, it fails to start the etcd server with the error message member already bootstrapped.

Command to check the new etcd pod status:

Command to edit the StatefulSet:

Run the appropriate command from the migrated etcd pod to validate the key-value pairs and ensure they are the same as in the existing etcd. This step is crucial to avoid any data loss during the migration process.

All worker nodes must satisfy the following requirements:

x86-64 CPU cores with SSE4.2 instruction support

Linux kernel 5.13 or higher is recommended (tested on Linux kernel 5.15). The kernel should have the following modules loaded:

nvme-tcp

Ensure that the following ports are not in use on the node:

10124: Mayastor gRPC server will use this port.

8420 / 4421: NVMf targets will use these ports.

Disks must be unpartitioned, unformatted, and used exclusively by the DiskPool.

The minimum capacity of the disks should be 10 GB.

Kubernetes core v1 API-group resources: Pod, Event, Node, Namespace, ServiceAccount, PersistentVolume, PersistentVolumeClaim, ConfigMap, Secret, Service, Endpoint.

Kubernetes batch API-group resources: CronJob, Job

Kubernetes apps API-group resources: Deployment, ReplicaSet, StatefulSet, DaemonSet

Kubernetes

The minimum supported worker node count is three nodes. When using the synchronous replication feature (N-way mirroring), the number of worker nodes to which Mayastor is deployed should be no less than the desired replication factor.

Mayastor supports the export and mounting of volumes over NVMe-oF TCP only. Worker node(s) on which a volume may be scheduled (to be mounted) must have the requisite initiator support installed and configured. In order to reliably mount Mayastor volumes over NVMe-oF TCP, a worker node's kernel version must be 5.13 or later and the nvme-tcp kernel module must be loaded.
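The kernel requirement above can be checked on a worker node with a short script. This is a sketch only: the function name `kernel_at_least` is hypothetical, and it assumes a `sort` that supports version ordering (`-V`, as in GNU coreutils).

```shell
# Hypothetical helper: succeed if the kernel release (default: the running
# kernel) is at least the required version, e.g. kernel_at_least 5.13
kernel_at_least() {
  required="$1"
  current="${2:-$(uname -r)}"
  # Drop distribution suffixes such as "-29-generic" before comparing.
  current_base="${current%%-*}"
  lowest="$(printf '%s\n%s\n' "$required" "$current_base" | sort -V | head -n 1)"
  [ "$lowest" = "$required" ]
}

if kernel_at_least 5.13; then
  echo "kernel version is sufficient for NVMe-oF TCP"
else
  echo "kernel is older than 5.13"
fi
# The nvme-tcp module can be checked with: lsmod | grep nvme_tcp
# and loaded, if missing, with:            sudo modprobe nvme-tcp
```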

The steps and commands which follow are intended only for use in conjunction with Mayastor version(s) 2.1.x and above.

Add the OpenEBS Mayastor Helm repository.

Run the following command to discover all the stable versions of the added chart repository:

Run the following command to install Mayastor version 2.4.

Verify the status of the pods by running the command:

The native NVMe-oF CAS engine of OpenEBS

It is provided here as a convenience to the writers, reviewers and editors of Mayastor's user documentation, to provide easy visualisation of content before publishing. It MUST NOT be used to guide the installation or use of Mayastor, other than for pre-release testing outside of production. As a staging branch, it is expected at times to contain errors and to be incomplete.

Mayastor is a performance optimised "Container Attached Storage" (CAS) solution of the CNCF project OpenEBS. The goal of OpenEBS is to extend Kubernetes with a declarative data plane, providing flexible persistent storage for stateful applications.

Design goals for Mayastor include:

Highly available, durable persistence of data

To be readily deployable and easily managed by autonomous SRE or development teams

To be a low-overhead abstraction for NVMe-based storage devices

Mayastor incorporates Intel's Storage Performance Development Kit (SPDK). It has been designed from the ground up to leverage the protocol and compute efficiency of NVMe-oF semantics, and the performance capabilities of the latest generation of solid-state storage devices, in order to deliver a storage abstraction with performance overhead measured to be within the range of single-digit percentages.

By comparison, most "shared everything" storage systems are widely thought to impart an overhead of at least 40% (and sometimes as much as 80% or more) as compared to the capabilities of the underlying devices or cloud volumes; additionally traditional shared storage scales in an unpredictable manner as I/O from many workloads interact and compete for resources.

While Mayastor utilizes NVMe-oF it does not require NVMe devices or cloud volumes to operate and can work well with other device types.

Mayastor's source code and documentation are distributed amongst a number of GitHub repositories under the OpenEBS organisation. The following list describes some of the main repositories but is not exhaustive.

: contains the source code of the data plane components

: contains the source code of the control plane components

: contains common protocol buffer definitions and OpenAPI specifications for Mayastor components

The objective of this section is to provide the user and evaluator of Mayastor with a topological view of the gross anatomy of a Mayastor deployment. A description will be made of the expected pod inventory of a correctly deployed cluster, the roles and functions of the constituent pods and related Kubernetes resource types, and of the high level interactions between them and the orchestration thereof.

More detailed guides to Mayastor's components, their design and internal structure, and instructions for building Mayastor from source, are maintained within the project's GitHub repository.

The io-engine pod encapsulates Mayastor containers, which implement the I/O path from the block devices at the persistence layer, up to the relevant initiators on the worker nodes mounting volume claims. The Mayastor process running inside this container performs four major functions:

Creates and manages DiskPools hosted on that node.

Creates, exports, and manages volume controller objects hosted on that node.

Creates and exposes replicas from DiskPools hosted on that node over NVMe-TCP.

Provides a gRPC interface service to orchestrate the creation, deletion and management of the above objects, hosted on that node.

Before the io-engine pod starts running, an init container attempts to verify connectivity to the agent-core in the namespace where Mayastor has been deployed. If a connection is established, the io-engine pod registers itself over gRPC to the agent-core. In this way, the agent-core maintains a registry of nodes and their supported api-versions.

The scheduling of these pods is determined declaratively by using a DaemonSet specification. By default, a nodeSelector field is used within the pod spec to select all worker nodes to which the user has attached the label

The csi-node pods within a cluster implement the node plugin component of Mayastor's CSI driver. As such, their function is to orchestrate the mounting of Mayastor-provisioned volumes on worker nodes on which application pods consuming those volumes are scheduled. By default, a csi-node pod is scheduled on every node in the target cluster, as determined by a DaemonSet resource of the same name. Each of these pods encapsulates two containers, csi-node, and csi-driver-registrar. The node plugin does not need to run on every worker node within a cluster and this behavior can be modified, if desired, through the application of appropriate node labeling and the addition of a corresponding nodeSelector entry within the pod spec of the csi-node DaemonSet. However, it should be noted that if a node does not host a plugin pod, then it will not be possible to schedule an application pod on it, which is configured to mount Mayastor volumes.

etcd is a distributed reliable key-value store for the critical data of a distributed system. Mayastor uses etcd as a reliable persistent store for its configuration and state data.

The supportability tool is used to create support bundles (archive files) by interacting with multiple services present in the cluster where Mayastor is installed. These bundles contain information about Mayastor resources like volumes, pools and nodes, and can be used for debugging. The tool can collect the following information:

Topological information of Mayastor's resource(s) by interacting with the REST service

Historical logs by interacting with Loki. If Loki is unavailable, it interacts with the kube-apiserver to fetch logs.

Mayastor-specific Kubernetes resources by interacting with the kube-apiserver

Mayastor-specific information from etcd (internal) by interacting with the etcd server.

Loki aggregates and centrally stores logs from all Mayastor containers which are deployed in the cluster.

Promtail is a log collector built specifically for Loki. It uses the configuration file for target discovery and includes analogous features for labeling, transforming, and filtering logs from containers before ingesting them to Loki.

When a node allocates storage capacity for a replica of a persistent volume (PV) it does so from a DiskPool. Each node may create and manage zero, one, or more such pools. The ownership of a pool by a node is exclusive. A pool can manage only one block device, which constitutes the entire data persistence layer for that pool and thus defines its maximum storage capacity.

A pool is defined declaratively, through the creation of a corresponding DiskPool custom resource on the cluster. The DiskPool must be created in the same namespace where Mayastor has been deployed. User configurable parameters of this resource type include a unique name for the pool, the node name on which it will be hosted and a reference to a disk device which is accessible from that node. The pool definition requires the reference to its member block device to adhere to a discrete range of schemas, each associated with a specific access mechanism, transport, or device type.

spec.disks under DiskPool CR

Once a node has created a pool it is assumed that it henceforth has exclusive use of the associated block device; it should not be partitioned, formatted, or shared with another application or process. Any pre-existing data on the device will be destroyed.

A RAM drive isn't suitable for use in production as it uses volatile memory for backing the data. The memory for this disk emulation is allocated from the hugepages pool. Make sure to allocate sufficient additional hugepages resource on any storage nodes which will provide this type of storage.

To get started, it is necessary to create and host at least one pool on one of the nodes in the cluster. The number of pools available limits the extent to which the synchronous N-way mirroring (replication) of PVs can be configured; the number of pools configured should be equal to or greater than the desired maximum replication factor of the PVs to be created. Also, while placing data replicas ensure that appropriate redundancy is provided. Mayastor's control plane will avoid placing more than one replica of a volume on the same node. For example, the minimum viable configuration for a Mayastor deployment which is intended to implement 3-way mirrored PVs must have three nodes, each having one DiskPool, with each of those pools having one unique block device allocated to it.
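Because the control plane avoids placing more than one replica of a volume on the same node, the highest usable replication factor is bounded by the number of distinct nodes that host a pool. The sketch below computes that bound from a plain "pool name, node name" listing. The input format and the function name are hypothetical and are not the output of any Mayastor command.

```shell
# Hypothetical helper: read lines of "<pool-name> <node-name>" on stdin and
# print the number of distinct nodes that host at least one pool.
max_replication_factor() {
  awk '{ print $2 }' | sort -u | awk 'END { print NR }'
}

# Three pools across three nodes can support 3-way mirrored PVs.
printf '%s\n' \
  "pool-on-node-1 worker-node-1" \
  "pool-on-node-2 worker-node-2" \
  "pool-on-node-3 worker-node-3" | max_replication_factor
```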

Using one or more of the following examples as templates, create the required type and number of pools.

The status of DiskPools may be determined by reference to their cluster CRs. Available, healthy pools should report their State as online. Verify that the expected number of pools have been created and that they are online.

Mayastor dynamically provisions PersistentVolumes (PVs) based on StorageClass definitions created by the user. Parameters of the definition are used to set the characteristics and behaviour of its associated PVs. A detailed description of these parameters is found elsewhere within this documentation. Most importantly, the StorageClass definition is used to control the level of data protection afforded to its PVs (that is, the number of synchronous data replicas which are maintained, for purposes of redundancy). It is possible to create any number of StorageClass definitions, spanning all permitted parameter permutations.

We illustrate this quickstart guide with two examples of possible use cases; one which offers no data redundancy (i.e. a single data replica), and another having three data replicas.

If all verification steps in the preceding stages were satisfied, then Mayastor has been successfully deployed within the cluster. In order to verify basic functionality, we will now dynamically provision a Persistent Volume based on a Mayastor StorageClass, mount that volume within a small test pod which we'll create, and use the fio utility within that pod to check that I/O to the volume is processed correctly.

Use kubectl to create a PVC based on a StorageClass that you created in the previous stage. In the example shown below, we'll consider that StorageClass to have been named "mayastor-1". Replace the value of the field "storageClassName" with the name of your own Mayastor-based StorageClass.

For the purposes of this quickstart guide, it is suggested to name the PVC "ms-volume-claim", as this is what will be illustrated in the example steps which follow.

If you used the storage class example from the previous stage, then the volume binding mode is set to WaitForFirstConsumer. This means that the volume won't be created until there is an application using it. We will go ahead and create the application pod and then check all the resources that should have been created as part of that in Kubernetes.

The Mayastor CSI driver will cause the application pod and the corresponding Mayastor volume's NVMe target/controller ("Nexus") to be scheduled on the same Mayastor Node, in order to assist with restoration of volume and application availability in the event of a storage node failure.

In this version, applications using PVs provisioned by Mayastor can only be successfully scheduled on Mayastor Nodes. This behaviour is controlled by the local: parameter of the corresponding StorageClass, which by default is set to a value of true. This is the only supported value for this release; setting a non-local configuration may cause scheduling of the application pod to fail, as the PV cannot be mounted to a worker node other than a Mayastor Node. This behaviour will change in a future release.

We will now verify the Volume Claim and that the corresponding Volume and Mayastor Volume resources have been created and are healthy.

The status of the PVC should be "Bound".

The status of the volume should be "online".

Verify that the pod has been deployed successfully, having the status "Running". It may take a few seconds after creating the pod before it reaches that status, proceeding via the "ContainerCreating" state.

We now execute the FIO Test utility against the Mayastor PV for 60 seconds, checking that I/O is handled as expected and without errors. In this quickstart example, we use a pattern of random reads and writes, with a block size of 4k and a queue depth of 16.
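The workload described above can be expressed as a fio job file, sketched below. The pattern, block size, queue depth and runtime come from this guide; the target path `/volume/test`, the 800MiB file size, the `libaio` engine and direct I/O are assumptions chosen for illustration and should be adapted to the mount path of your test pod.

```shell
# Write a fio job file describing the quickstart workload.
cat > mayastor-randrw.fio <<'EOF'
[global]
# assumption: Linux native asynchronous I/O, bypassing the page cache
ioengine=libaio
direct=1
# run for a fixed 60 seconds regardless of file size
time_based
runtime=60

[benchtest]
# assumption: path where the Mayastor volume is mounted in the pod
filename=/volume/test
# assumption: size of the test file
size=800m
# random reads and writes, 4k block size, queue depth of 16
rw=randrw
bs=4k
iodepth=16
EOF

# Inside the test pod, the job would then be started with: fio mayastor-randrw.fio
```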

If no errors are reported in the output then Mayastor has been correctly configured and is operating as expected. You may create and consume additional Persistent Volumes with your own test applications.

The node drain functionality marks the node as unschedulable and then gracefully moves all the volume targets off the drained node. This feature is in line with the node drain functionality of Kubernetes.

To start the drain operation, execute:

    kubectl-mayastor drain node <node_name> <label>

To get the list of nodes on which the drain operation has been performed, execute:

    kubectl-mayastor get drain nodes

To halt the drain operation or to make the node schedulable again, execute:

The Mayastor pool metrics exporter runs as a sidecar container within every io-engine pod and exposes pool usage metrics in Prometheus format. These metrics are exposed on port 9502 using an HTTP endpoint /metrics and are refreshed every five minutes.

When eventing is activated, the stats exporter operates within the obs-callhome-stats container, located in the callhome pod. The statistics are made accessible through an HTTP endpoint at port 9090, specifically using the /stats route.

To install, add the Prometheus-stack helm chart and update the repo.

Then, install the Prometheus monitoring stack and set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues to false. This enables Prometheus to discover custom ServiceMonitor for Mayastor.

Next, install the ServiceMonitor resource to select services and specify their underlying endpoint objects.

In previous versions, the control plane ensured replica redundancy by monitoring all volume targets and checking for any that were in the Degraded state, indicating that one or more replicas of that volume target were faulty. When such a volume target was found, the faulty replica was removed. Then, a new replica was created and added to the volume target object. As part of adding the new child in the data plane, a full rebuild was initiated from one of the existing Online replicas. However, the drawback of this approach was that even if a replica was inaccessible for a short period (e.g., due to a node restart), a full rebuild was triggered. This may not have a significant impact on small replicas, but it is not desirable for large ones.

The partial rebuild feature overcomes the above problem and helps achieve faster rebuild times. When a volume target encounters an I/O error on a child/replica, it marks the child as Faulted (removing it from the I/O path) and begins to maintain a write log for all subsequent writes. The core agent then starts a wait (10 minutes by default) for the replica to come back. If the child's replica is online again within the timeout, the control plane requests the volume target to online the child and add it to the I/O path, along with a partial rebuild process using the aforementioned write log.
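The decision described above can be summarised as a small sketch. It is an illustration only, not Mayastor's implementation: the function name is hypothetical, and it assumes that a replica which does not return within the timeout falls back to the full rebuild path, and that returning exactly at the timeout still counts as "within" it.

```shell
# Hypothetical helper: which rebuild applies for a replica that was
# unavailable for the given number of seconds?
# Usage: rebuild_type <offline_seconds> [timeout_seconds]
rebuild_type() {
  offline_seconds="$1"
  timeout_seconds="${2:-600}"   # default wait of 10 minutes
  if [ "$offline_seconds" -le "$timeout_seconds" ]; then
    echo "partial"   # replay only the writes recorded in the write log
  else
    echo "full"      # assumption: replace the replica and rebuild it fully
  fi
}

rebuild_type 45    # e.g. a brief node restart
rebuild_type 900   # replica absent for longer than the wait
```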

The data-plane handles both full and partial replica rebuilds. To view history of the rebuilds that an existing volume target has undergone during its lifecycle until now, you can use the given kubectl command.

To get the output in table format:

To get the output in JSON format:

For example: kubectl mayastor get rebuild-history e898106d-e735-4edf-aba2-932d42c3c58d -ojson

By default, Mayastor collects basic information related to the number and scale of user-deployed instances. The collected data is anonymous and is encrypted at rest. This data is used to understand storage usage trends, which in turn helps maintainers prioritize their contributions to maximize the benefit to the community as a whole.

A summary of the information collected is given below:

The collected information is stored on behalf of the OpenEBS project by DataCore Software Inc. in data centers located in Texas, USA.

To disable collection of usage data or generation of events, the following Helm command, along with the flag, can either be executed during installation or can be re-executed post-installation.

To disable the collection of data metrics from the cluster, add the following flag to the Helm install command.

When eventing is enabled, NATS pods are created to gather various events from the cluster, including statistical metrics such as pools created. To deactivate eventing within the cluster, include the following flag in the Helm installation command.

This section provides an overview of the topology and function of the Mayastor data plane. Developer level documentation is maintained within the project's GitHub repository.

An instance of the mayastor binary running inside a Mayastor container, which is encapsulated by a Mayastor Pod.

Mayastor terminology. A data structure instantiated within a Mayastor instance which performs I/O operations for a single Mayastor volume. Each nexus acts as an NVMe controller for the volume it exports. Logically it is composed chiefly of a 'static' function table which determines its base I/O handling behaviour (held in common with all other nexuses of the cluster), combined with configuration information specific to the Mayastor volume it exports, such as the identity of its children. The function of a nexus is to route I/O requests for its exported volume which are received on its host container's target to the underlying persistence layer, via any applied transformations ("data services"), and to return responses to the calling initiator back along that same I/O path.

Mayastor's volume management abstraction. Block devices contributing storage capacity to a Mayastor deployment do so by their inclusion within configured storage pools. Each Mayastor node can host zero or more pools and each pool can "contain" a single base block device as a member. The total capacity of the pool is therefore determined by the size of that device. Pools can only be hosted on nodes running an instance of a mayastor pod.

Multiple volumes can share the capacity of one pool but thin provisioning is not supported. Volumes cannot span multiple pools for the purposes of creating a volume larger in size than could be accommodated by the free capacity in any one pool.

Internally, a storage pool is an implementation of an SPDK blobstore.

A code abstraction of a block-level device to which I/O requests may be sent, presenting a consistent device-independent interface. Mayastor's bdev abstraction layer is based upon that of Intel's Storage Performance Development Kit (SPDK).

base bdev - Handles I/O directly, e.g. a representation of a physical SSD device

logical volume - A bdev representing an SPDK logical volume ("lvol bdev")

Mayastor terminology. An lvol bdev (a "logical volume", created within a pool and consuming pool capacity) which is being exported by a Mayastor instance, for consumption by a nexus (local or remote to the exporting instance) as a "child"

Mayastor terminology. An NVMe controller created and owned by a given nexus, which handles I/O downstream from the nexus' target by routing it to the replica associated with that child.

A nexus has a minimum of one child, which must be local (that is, exported as a replica from a pool hosted by the same mayastor instance that hosts the nexus itself). If the Mayastor volume being exported by the nexus is derived from a StorageClass with a replication factor greater than 1 (i.e. synchronous N-way mirroring is enabled), then the nexus will have additional children, up to the desired number of data copies.

To export a volume is to allow the discovery of, and acceptance of I/O for, that volume by a client initiator, over a Mayastor storage target.

For volumes based on a StorageClass defined as having a replication factor of 1, a single data copy is maintained by Mayastor. The I/O path is largely (entirely, if using malloc:/// pool devices) constrained to within the bounds of a single mayastor instance, which hosts both the volume's nexus and the storage pool in use as its persistence layer.

Each mayastor instance presents a user-space storage target over NVMe-oF TCP. Worker nodes mounting a Mayastor volume for a scheduled application pod to consume are directed by Mayastor's CSI driver implementation to connect to the appropriate transport target for that volume and perform discovery, after which they are able to send I/O to it, directed at the volume in question. Regardless of how many volumes, and by extension how many nexus a mayastor instance hosts, all share the same target instances.

Application I/O received on a target for a volume is passed to the virtual bdev at the front-end of the nexus hosting that volume. In the case of a non-replicated volume, the nexus is composed of a single child, to which the I/O is necessarily routed. As a virtual bdev itself, the child handles the I/O by routing it to the next device, in this case the replica that was created for this child. In non-replicated scenarios, both the volume's nexus and the pool which hosts its replica are co-located within the same mayastor instance, hence the I/O is passed from child to replica using SPDK bdev routines, rather than a network level transport. At the pool layer, a blobstore maps the lvol bdev exported as the replica concerned to the base bdev on which the pool was constructed. From there, other than for malloc:/// devices, the I/O passes to the host kernel via either aio or io_uring, thence via the appropriate storage driver to the physical disk device.

The disk device's response to the I/O request is returned along the same path to the caller's initiator.

If the StorageClass on which a volume is based specifies a replication factor of greater than one, then a synchronous mirroring scheme is employed to maintain multiple redundant data copies. For a replicated volume, creation and configuration of the volume's nexus requires additional orchestration steps. Prior to creating the nexus, not only must a local replica be created and exported as for the non-replicated case, but the requisite count of additional remote replicas required to meet the replication factor must be created and exported from Mayastor instances other than that hosting the nexus itself. The control plane core-agent component will select appropriate pool candidates, which includes ensuring sufficient available capacity and that no two replicas are sited on the same Mayastor instance (which would compromise availability during coincident failures). Once suitable replicas have been successfully exported, the control plane completes the creation and configuration of the volume's nexus, with the replicas as its children. In contrast to their local counterparts, remote replicas are exported, and so connected to by the nexus, over NVMe-oF using a user-mode initiator and target implementation from the SPDK.
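The placement constraints described above (sufficient capacity, one local replica, and no two replicas on the same Mayastor instance) can be illustrated with a minimal Python sketch. It is not the core-agent's actual algorithm: the Pool type, the select_replica_pools function and the "most free space first" ordering are all hypothetical names and choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    name: str        # hypothetical pool identifier
    node: str        # Mayastor instance (node) hosting the pool
    free_bytes: int  # capacity still available in the pool


def select_replica_pools(pools, volume_bytes, replicas, nexus_node):
    """Pick one pool per replica: one local to the nexus, the rest on distinct nodes."""
    # Keep only pools that can hold a full copy of the volume.
    candidates = [p for p in pools if p.free_bytes >= volume_bytes]
    # Prefer pools with the most free space (an illustrative policy only).
    candidates.sort(key=lambda p: p.free_bytes, reverse=True)

    chosen = {}  # node name -> selected pool; guarantees one replica per node
    # The local replica comes first: it must live on the nexus's own node.
    for pool in candidates:
        if pool.node == nexus_node:
            chosen[pool.node] = pool
            break
    if nexus_node not in chosen:
        raise ValueError("no local pool with sufficient capacity")

    # Remote replicas: never reuse a node that already holds a replica.
    for pool in candidates:
        if len(chosen) == replicas:
            break
        if pool.node not in chosen:
            chosen[pool.node] = pool

    if len(chosen) < replicas:
        raise ValueError("not enough nodes with sufficient capacity")
    return list(chosen.values())
```

Keying the selection by node name is what enforces the "no two replicas on the same instance" rule; a request that cannot be satisfied fails outright rather than silently placing fewer copies.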

Write I/O requests to the nexus are handled synchronously; the I/O is dispatched to all (healthy) children and only when completion is acknowledged by all is the I/O acknowledged to the calling initiator via the nexus front-end. Read I/O requests are similarly issued to all children, with just the first response returned to the caller.
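The write and read semantics described above can be sketched in Python as follows. This is a toy model under stated assumptions, not Mayastor's implementation (which is built on SPDK): the Child and Nexus classes, their methods and the use of a thread pool are hypothetical and exist only to show "acknowledge writes after all healthy children complete" and "return the first read response".

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


class Child:
    """Hypothetical stand-in for a nexus child backed by one replica."""

    def __init__(self, name):
        self.name = name
        self.healthy = True
        self.blocks = {}  # logical block address -> data

    def write(self, lba, data):
        self.blocks[lba] = data
        return True

    def read(self, lba):
        return self.blocks.get(lba)


class Nexus:
    """Toy nexus that fans I/O out to its children."""

    def __init__(self, children):
        self.children = children
        self.executor = ThreadPoolExecutor(max_workers=max(1, len(children)))

    def _healthy_children(self):
        healthy = [c for c in self.children if c.healthy]
        if not healthy:
            raise RuntimeError("nexus has no healthy children")
        return healthy

    def write(self, lba, data):
        # Dispatch to every healthy child; acknowledge only when all complete.
        futures = [self.executor.submit(c.write, lba, data)
                   for c in self._healthy_children()]
        return all(f.result() for f in futures)

    def read(self, lba):
        # Issue the read to every healthy child; return the first response.
        futures = [self.executor.submit(c.read, lba)
                   for c in self._healthy_children()]
        done, _ = wait(futures, return_when=FIRST_COMPLETED)
        return next(iter(done)).result()
```

Because every healthy child holds the same data once a write is acknowledged, it does not matter which child answers a read first.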

2MiB-sized Huge Pages must be supported and enabled on the mayastor storage nodes. A minimum number of 1024 such pages (i.e. 2GiB total) must be available exclusively to the Mayastor pod on each node, which should be verified thus:

If fewer than 1024 pages are available then the page count should be reconfigured on the worker node as required, accounting for any other workloads which may be scheduled on the same node and which also require them. For example:

This change should also be made persistent across reboots by adding the required value to the file /etc/sysctl.conf like so:

If you modify the Huge Page configuration of a node, you MUST either restart kubelet or reboot the node. Mayastor will not deploy correctly if the available Huge Page count as reported by the node's kubelet instance does not satisfy the minimum requirements.
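As an illustration of the huge page requirement above, the following Python sketch checks /proc/meminfo-style text for at least 1024 free 2MiB pages. The function names are hypothetical. The field names (HugePages_Free, Hugepagesize) are the ones the Linux kernel reports in /proc/meminfo. Treating "free" pages as the pages available to the Mayastor pod is a simplifying assumption.

```python
import re

MIN_PAGES = 1024     # minimum page count stated in this guide
PAGE_SIZE_KB = 2048  # 2MiB huge pages, expressed in kB as in /proc/meminfo


def parse_meminfo(text):
    """Return the integer fields of /proc/meminfo-style text as a dict."""
    fields = {}
    for line in text.splitlines():
        match = re.match(r"^(\w+):\s+(\d+)", line)
        if match:
            fields[match.group(1)] = int(match.group(2))
    return fields


def hugepages_ok(text, min_pages=MIN_PAGES):
    """True if the page size is 2MiB and enough huge pages are free."""
    info = parse_meminfo(text)
    return (
        info.get("Hugepagesize") == PAGE_SIZE_KB
        and info.get("HugePages_Free", 0) >= min_pages
    )
```

On a Linux worker node, passing the contents of /proc/meminfo to hugepages_ok gives a quick pass/fail answer. The value reported by the node's kubelet remains the authoritative one for deployment.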

All worker nodes which will have Mayastor pods running on them must be labelled with the OpenEBS engine type "mayastor". This label will be used as a node selector by the Mayastor DaemonSet, which is deployed as a part of the Mayastor data plane components installation. To add this label to a node, execute:

If you set csi.node.topology.nodeSelector: true, then you will need to label the worker nodes according to csi.node.topology.segments. Both the csi-node and agent-ha-node DaemonSets will include the topology segments in their node selectors.

The Mayastor kubectl plugin can be used to view and manage Mayastor resources such as nodes, pools and volumes. It is also used for operations such as scaling the replica count of volumes.

The Mayastor kubectl plugin is available for the Linux platform. The binary for the plugin can be found .

Add the downloaded Mayastor kubectl plugin under $PATH.

To verify the installation, execute:

Sample command to use kubectl plugin:

You can use the plugin with the following options:

All the above resource information can be retrieved for a particular resource using its ID. The command to do so is as follows: kubectl mayastor get <resource_name> <resource_id>

Table is the default output format.

The plugin requires access to the Mayastor REST server for execution. It gets the master node IP from the kube-config file. In case of any failure, the REST endpoint can be specified using the '--rest' flag.

The plugin currently does not have authentication support.

The plugin can operate only over HTTP.

The StorageClass resource in Kubernetes is used to supply parameters to volumes when they are created. It is a convenient way of grouping volumes with common characteristics. All parameters take a string value. A brief explanation of each supported Mayastor parameter follows.

The file system that will be used when mounting the volume. The supported file systems are ext4, xfs and btrfs; the default, when none is specified, is ext4. We recommend xfs, which is considered to be more advanced and performant. Please ensure that the requested filesystem driver is installed on all worker nodes in the cluster before using it.

The parameter 'protocol' takes the value nvmf (NVMe over TCP protocol). It is used to mount the volume (target) on the application node.

The string value must be a number greater than zero. The Mayastor control plane will always try to keep this many copies of the data, if possible. If set to one, the volume does not tolerate any node failure. If set to two, it tolerates one node failure. If set to three, it tolerates two node failures, and so on.
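The parameter rules above can be summarised in a small Python sketch. It is illustrative only and is not part of Mayastor. The key names fsType and repl are assumptions, since the parameter names for the file system and replica count are not shown in the text above. The fallback values used for protocol and repl are also assumptions.

```python
SUPPORTED_FILESYSTEMS = {"ext4", "xfs", "btrfs"}


def check_parameters(params):
    """Validate a StorageClass 'parameters' mapping and summarise it."""
    # All StorageClass parameters take a string value.
    for key, value in params.items():
        if not isinstance(value, str):
            raise TypeError(f"{key} must be a string, got {type(value).__name__}")

    # 'fsType' is an assumed key name; ext4 is the documented default.
    fs_type = params.get("fsType", "ext4")
    if fs_type not in SUPPORTED_FILESYSTEMS:
        raise ValueError(f"unsupported file system: {fs_type}")

    # Falling back to nvmf when the key is absent is an assumption.
    protocol = params.get("protocol", "nvmf")
    if protocol != "nvmf":
        raise ValueError(f"unsupported protocol: {protocol}")

    # 'repl' is an assumed key name, as is the fallback of one copy.
    repl = int(params.get("repl", "1"))
    if repl < 1:
        raise ValueError("replica count must be greater than zero")

    return {
        "fsType": fs_type,
        "protocol": protocol,
        "repl": repl,
        # N copies of the data tolerate N - 1 node failures.
        "tolerated_node_failures": repl - 1,
    }
```

For example, a mapping with a replica count of "3" yields tolerated_node_failures of 2, matching the rule that each additional copy tolerates one more node failure.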

The volumes can either be thick or thin provisioned. Adding the thin parameter to the StorageClass YAML allows the volume to be thinly provisioned. To do so, add thin: true under the parameters spec, in the StorageClass YAML. When the volumes are thinly provisioned, the user needs to monitor the pools, and if these pools start to run out of space, then either new pools must be added or volumes deleted to prevent thinly provisioned volumes from getting degraded or faulted. This is because when a pool with more than one replica runs out of space, Mayastor moves the largest out-of-space replica to another pool and then executes a rebuild. It then checks if all the replicas have sufficient space; if not, it moves the next largest replica to another pool, and this process continues till all the replicas have sufficient space.

The agents.core.capacity.thin spec present in the Mayastor Helm chart consists of the following configurable parameters that can be used to control the scheduling of thinly provisioned replicas:

poolCommitment parameter specifies the maximum allowed pool commitment limit (in percent).

volumeCommitment parameter specifies the minimum amount of free space that must be present in each replica pool in order to create new replicas for an existing volume. This value is specified as a percentage of the volume size.

volumeCommitmentInitial parameter specifies the minimum amount of free space that must be present in each replica pool in order to create new replicas for a new volume. This value is specified as a percentage of the volume size.
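One way to read the three parameters above is as a pair of checks applied to each candidate pool. The Python sketch below is an interpretation under stated assumptions, not the scheduler's actual code: the PoolState type, the function name, and the definition of "commitment" as the sum of the nominal sizes of replicas placed on the pool relative to its capacity are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PoolState:
    capacity: int   # total pool size, in bytes
    used: int       # bytes physically allocated so far
    committed: int  # sum of nominal sizes of replicas already placed (assumed meaning)


def can_place_thin_replica(pool, volume_size, new_volume,
                           pool_commitment, volume_commitment,
                           volume_commitment_initial):
    """Apply the commitment limits (all given in percent) to one pool."""
    # Check 1: the pool's total commitment must stay within poolCommitment.
    if (pool.committed + volume_size) * 100 > pool.capacity * pool_commitment:
        return False

    # Check 2: free space must cover a percentage of the volume size.
    # New volumes use volumeCommitmentInitial; existing ones use volumeCommitment.
    percent = volume_commitment_initial if new_volume else volume_commitment
    free = pool.capacity - pool.used
    return free * 100 >= volume_size * percent
```

With a pool of capacity 100, 90 used and 150 committed, a new volume of size 40 is rejected when volumeCommitmentInitial is 40 (16 units of free space required, 10 available) but accepted when it is 20. The percentages here are example inputs, not chart defaults.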

stsAffinityGroup represents a collection of volumes that belong to instances of a Kubernetes StatefulSet. When a StatefulSet is deployed, each instance within the StatefulSet creates its own individual volume, and these volumes collectively form the stsAffinityGroup. Each volume within the stsAffinityGroup corresponds to a pod of the StatefulSet.

This feature enforces the following rules to ensure the proper placement and distribution of replicas and targets so that there isn't any single point of failure affecting multiple instances of StatefulSet.